485,000 AI accelerators: Microsoft was Nvidia’s best customer this year

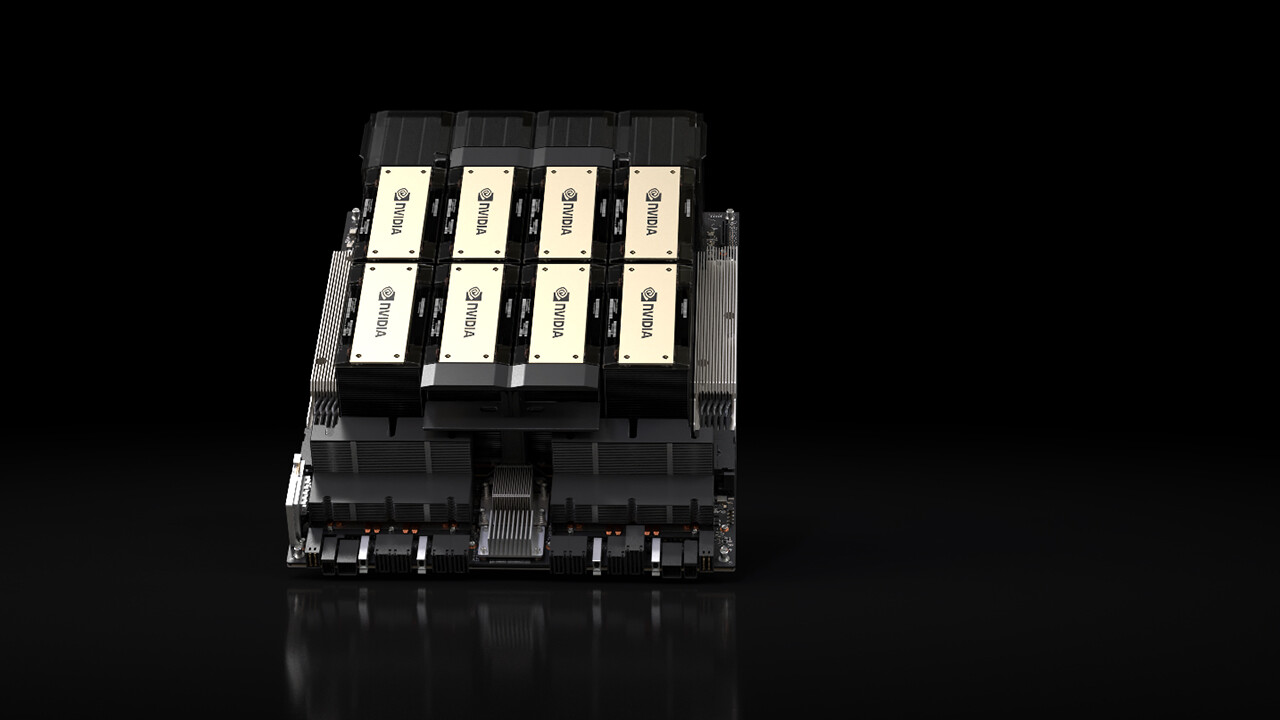

Image: Nvidia

Microsoft was the largest buyer of Nvidia’s Hopper-generation AI accelerators this year, according to an Omdia analysis cited by the Financial Times. Omdia estimates that Microsoft acquired about 485,000 AI accelerators from Nvidia this year, more than twice as many as the second-largest customer, ByteDance.

485,000 Hopper generation AI accelerators

The estimate is based on capital expenditures, server shipments and supply chain information disclosed by listed companies. The analysts estimate that Microsoft purchased about 485,000 Nvidia Hopper-generation AI accelerators this year for AI training and inference. That makes Microsoft by far Nvidia’s largest customer of the year, both within the US and internationally.

China ranks second and third

The Chinese companies ByteDance (mainly known for TikTok) and Tencent (social media, e-commerce, advertising) follow in second and third place. Each purchased around 230,000 AI accelerators, a figure that in this case also includes models built specifically for China, such as the H20, which, like the A800 and H800, is a result of strict US trade restrictions.

Meta before xAI, Amazon and Google

In the US market, Meta was the second-largest buyer of Nvidia’s Hopper GPUs behind Microsoft, with a total of 224,000 units this year. According to Omdia, the company behind Facebook, WhatsApp, Instagram and Quest also purchased 173,000 AMD MI300 accelerators this year. By comparison, Microsoft bought only 96,000 MI300s and has therefore focused more heavily on Nvidia.

Tesla and xAI, meanwhile, currently rely on 100,000 H100 GPUs for the AI supercomputer called Colossus, which is used to train LLMs such as Grok. When it was completed in October, however, Nvidia and xAI announced that they were already working on expanding Colossus to 200,000 GPUs, doubling its size.

Custom AI chips compete with Nvidia

Amazon and Google, which also want to run AI workloads on their own custom chips, purchased approximately 196,000 (Amazon) and 169,000 (Google) Hopper accelerators from Nvidia. Amazon deployed about 1.3 million of its own Trainium and Inferentia chips this year and wants to train the next generation of AI models together with OpenAI rival Anthropic, in which Amazon has invested $8 billion. Google’s own TPUs and Meta’s MTIA (Meta Training and Inference Accelerator) each account for approximately 1.5 million units. Omdia puts the number of Microsoft’s Maia chips at 200,000 units.

An engineer by training, Alexandre shares his knowledge on GPU performance for gaming and creation.
