For Rubin and Feynman: SK Hynix, Samsung, and Micron Show HBM4E with up to 64 GB

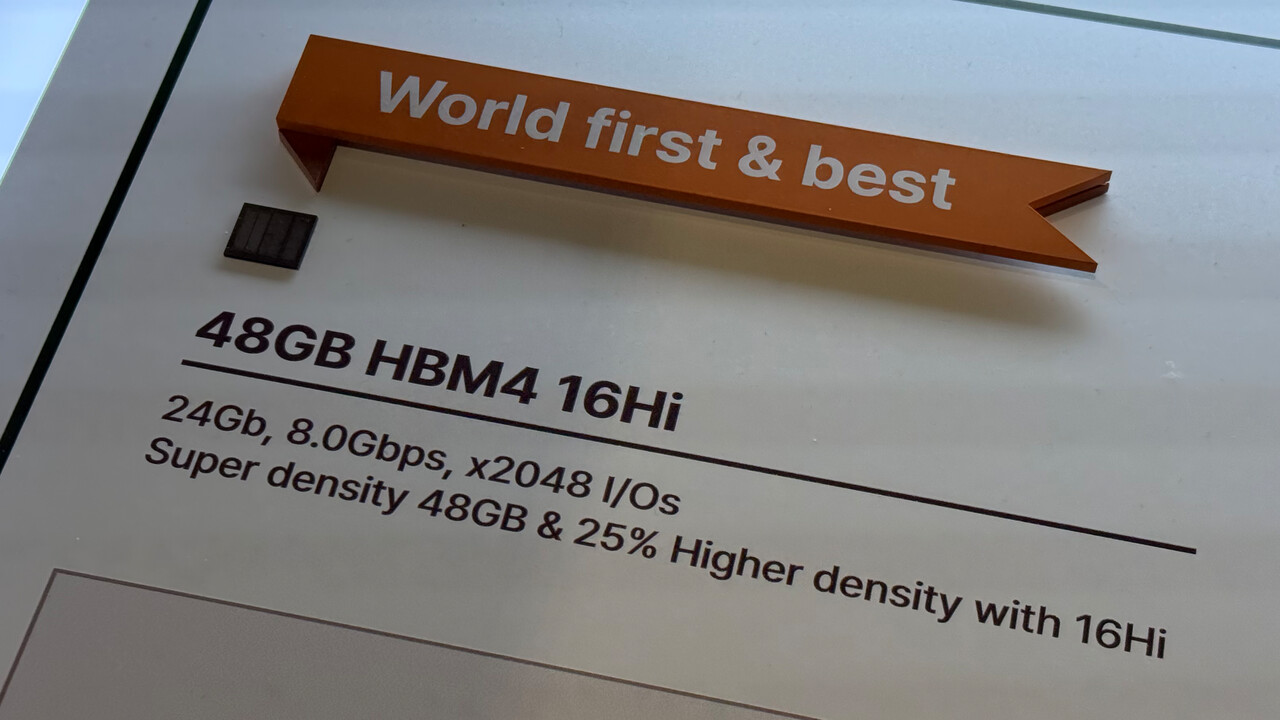

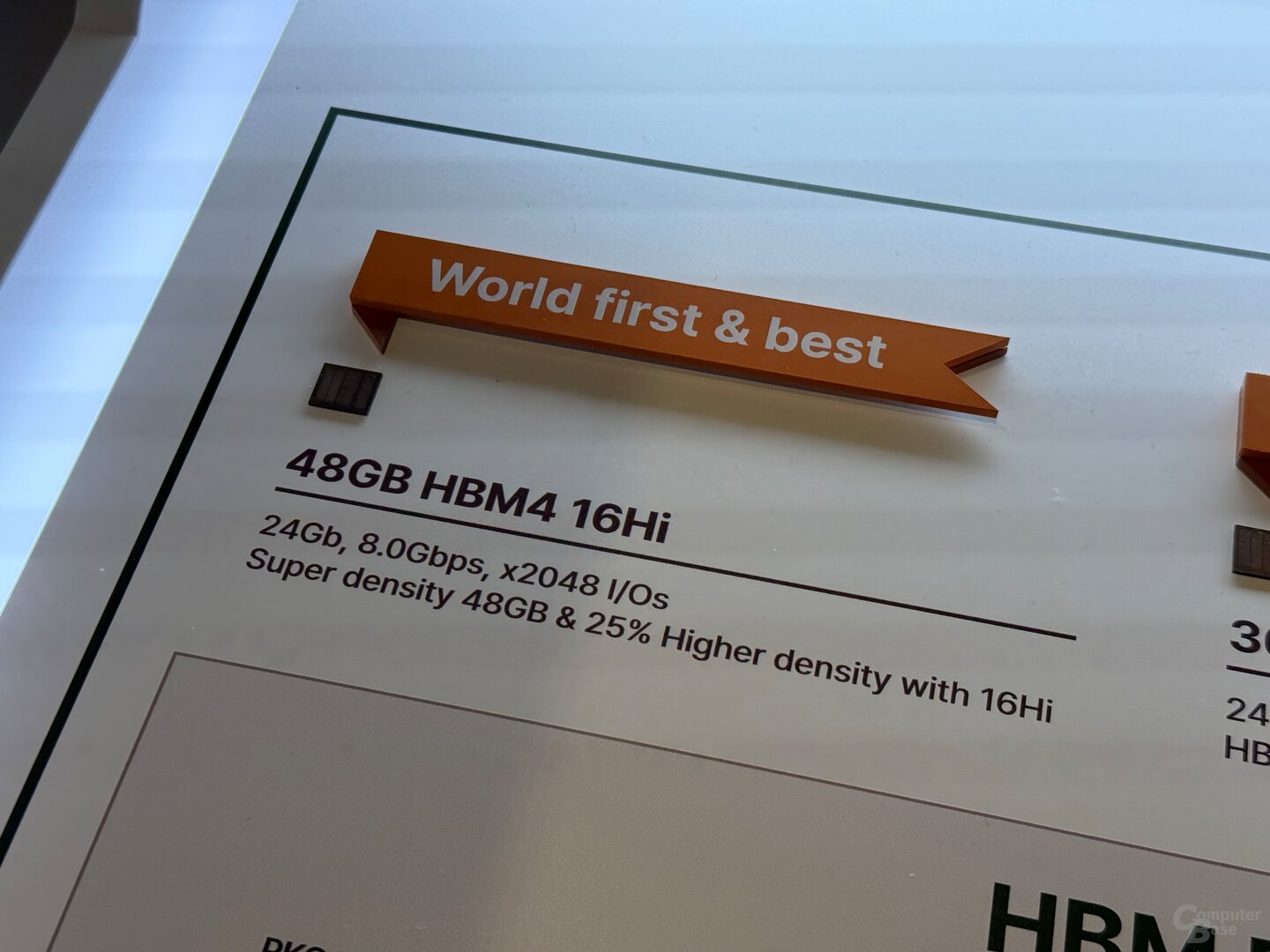

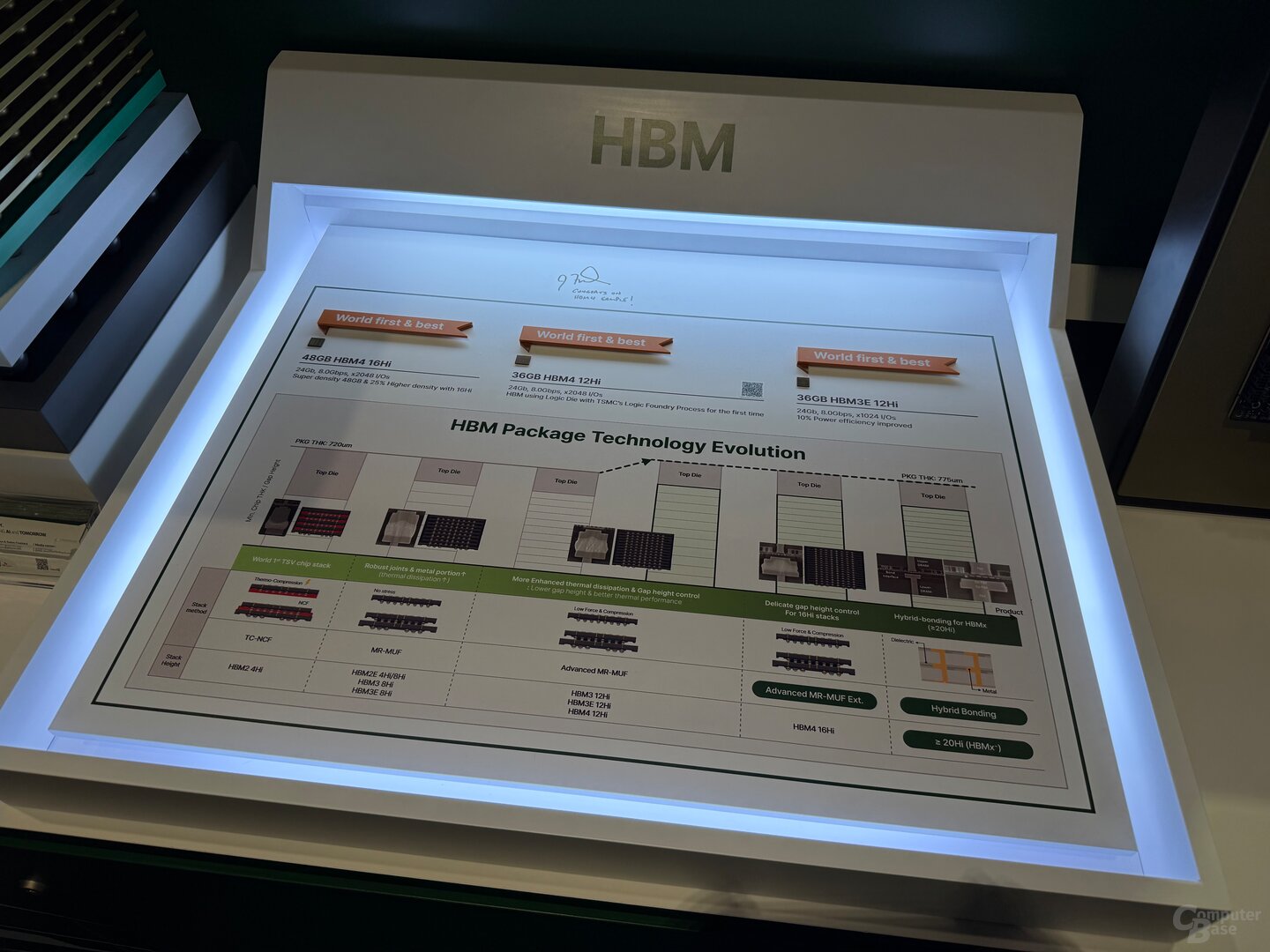

Major memory manufacturers SK Hynix, Samsung, and Micron are showing HBM4 in various configurations of up to 48 GB at GTC 2025. Development is similar across all three: 16-layer ("16Hi") memory will lead the future portfolio. But even that is not enough.

HBM4 by SK Hynix

The 12-layer version, known as "12Hi", sits below the absolute top and will probably be the much more common option. The other manufacturers are active here as well, and 36 GB HBM4 stacks are likely to become the new standard. Before that, HBM3E will already offer 36 GB in a 12-layer version, again from all manufacturers, as their latest sales plans for HBM had already indicated. According to its press release, SK Hynix plans to be able to ship 12-layer HBM4 from the end of this year, provided orders come in. Rubin's 288 GB of HBM4 is based on exactly these 36 GB stacks.
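The capacity figures above can be checked with simple arithmetic. The following is a minimal sketch: the per-layer capacity and the stack count are derived values that the article does not state, and the 16-layer result assumes the same per-layer capacity as the 12-layer stack.

```python
# Capacity arithmetic for the stack sizes named in the article.
STACK_GB_12HI = 36     # from the article: 12-layer HBM4 stack
LAYERS_12HI = 12       # from the article
RUBIN_TOTAL_GB = 288   # from the article: HBM4 capacity of Rubin

# Derived, not stated in the article: capacity per DRAM layer.
gb_per_layer = STACK_GB_12HI / LAYERS_12HI          # 3.0 GB, i.e. 24 Gbit

# Derived: number of 36 GB stacks needed for 288 GB.
stacks_on_rubin = RUBIN_TOTAL_GB // STACK_GB_12HI   # 8

# Assumption: a 16-layer stack uses the same per-layer capacity.
stack_gb_16hi = gb_per_layer * 16                   # 48.0 GB

print(gb_per_layer, stacks_on_rubin, stack_gb_16hi)
```

Under these assumptions, 288 GB corresponds to eight 12-layer stacks, and a 16-layer stack would land at the 48 GB mentioned in the introduction.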

The manufacturers also provide a little information on possible speeds and bandwidths. SK Hynix states that 8.0 Gbps is specified for the chips on display, while Samsung speaks of 9.2 Gbps, which probably already refers to a later expansion stage.
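To put the per-pin rates into perspective, they can be converted into peak bandwidth per stack. This is a rough sketch, assuming the 2048-bit per-stack interface of the JEDEC HBM4 standard, which the article itself does not state; the function name is purely illustrative.

```python
def stack_bandwidth_gbs(pin_rate_gbps: float, bus_width_bits: int = 2048) -> float:
    """Peak bandwidth of one stack in GB/s.

    pin_rate_gbps: data rate per pin in Gbit/s (figures from the article).
    bus_width_bits: interface width per stack (assumption: 2048 bit for HBM4).
    """
    return pin_rate_gbps * bus_width_bits / 8  # 8 bits per byte


sk_hynix_gbs = stack_bandwidth_gbs(8.0)  # 2048.0 GB/s, roughly 2 TB/s
samsung_gbs = stack_bandwidth_gbs(9.2)   # about 2355 GB/s

print(f"SK Hynix: {sk_hynix_gbs:.0f} GB/s per stack")
print(f"Samsung:  {samsung_gbs:.0f} GB/s per stack")
```

These are theoretical peak values per stack; the total for a GPU would scale with the number of stacks, again only under the stated interface-width assumption.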

64 GB in larger stacks is the future

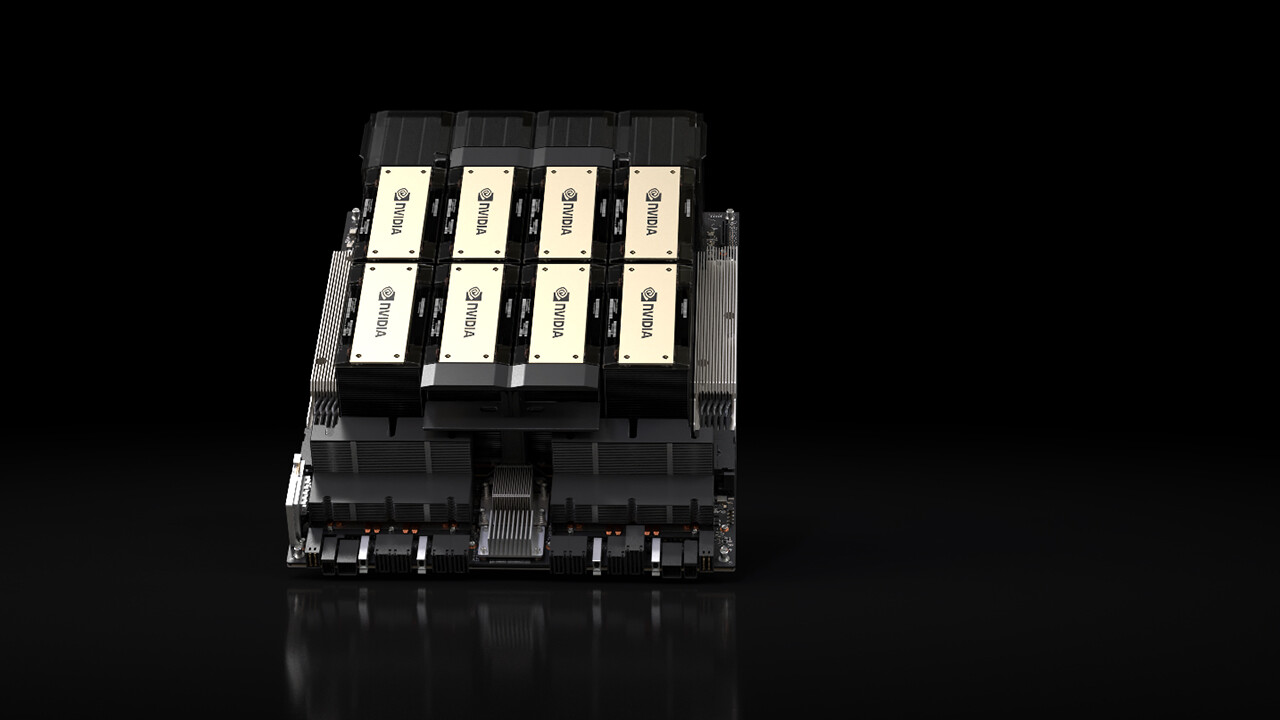

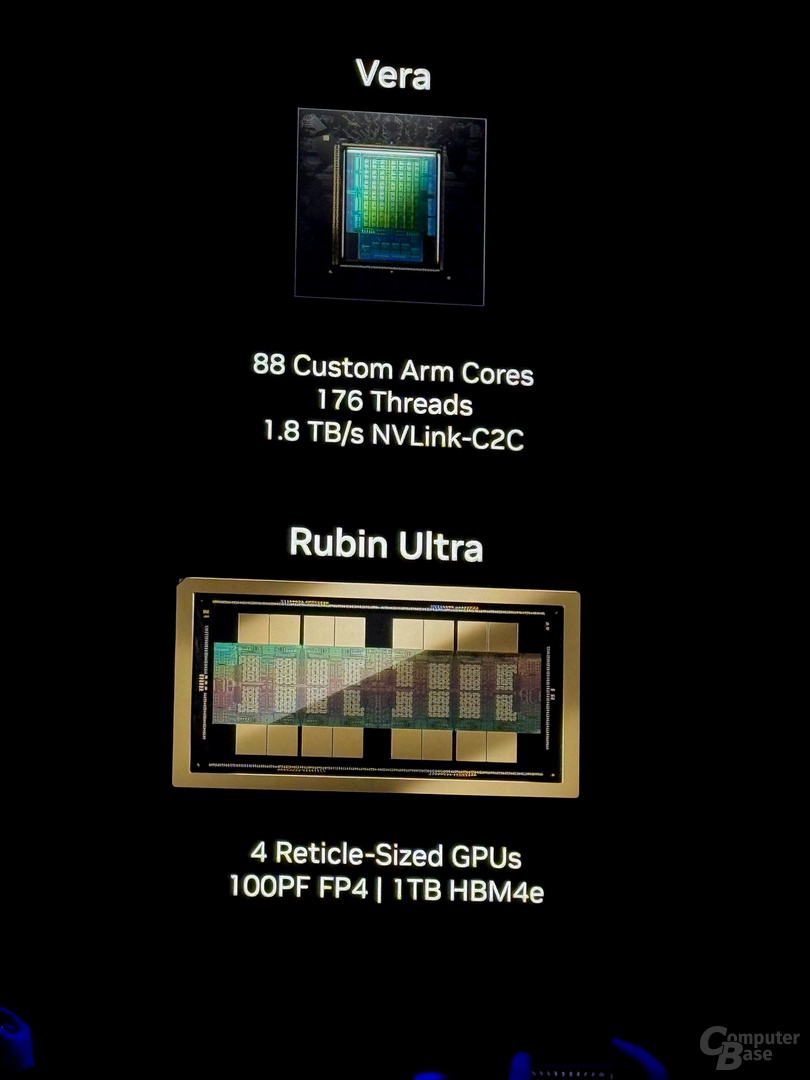

Rubin Ultra

SK Hynix HBM3E and HBM4

Techastuce received information on this topic from Nvidia at a manufacturer event in San Jose, California. The company covered the costs of travel to and from the event and five hotel nights. The manufacturer had no influence on the coverage, and there was no obligation to report.

An engineer by training, Alexandre shares his knowledge on GPU performance for gaming and creation.