GB300 NVL72: Nvidia Blackwell Ultra Upgrades to 288 GB HBM3E 17 comments

Image: nvidia

Nvidia presented a further development of the current Blackwell architecture with the Blackwell Ultra for GTC 2025. This should make the data center solution suitable for the age of AI implementation models with higher inference requirements.

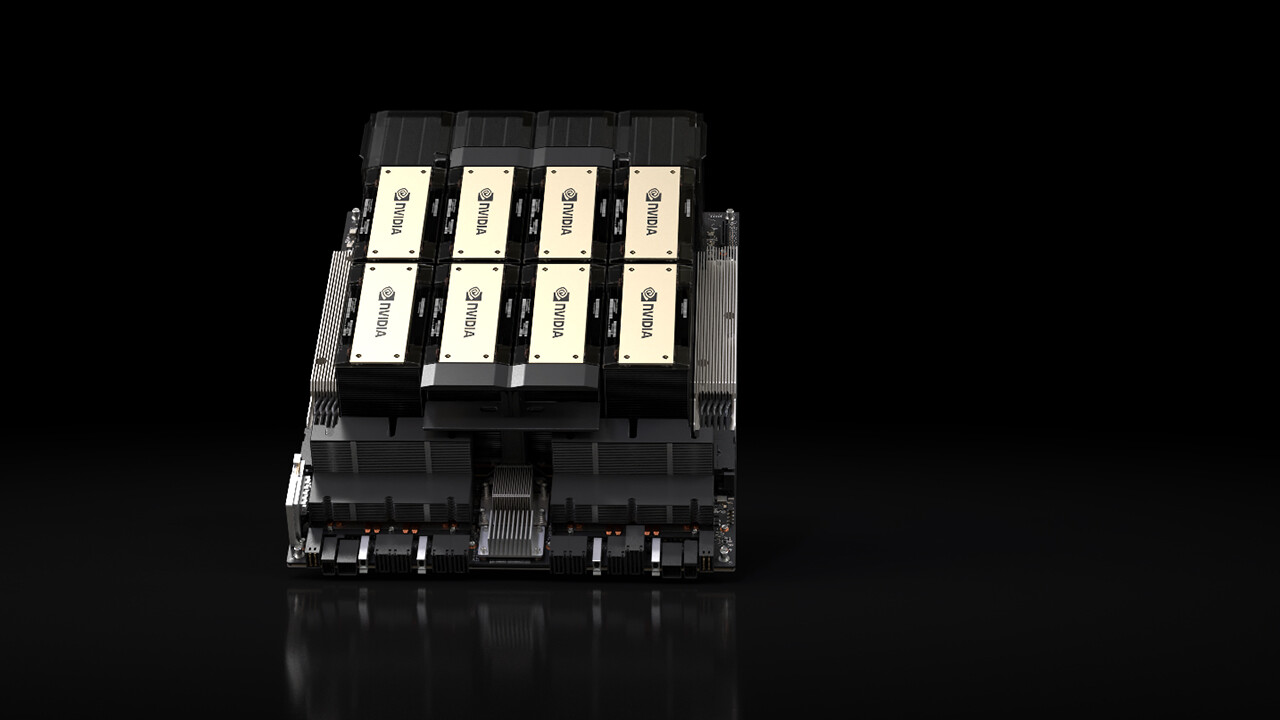

The B300 GPU is a further development of the B200 GPU presented last year, with which NVIDIA announced the Blackwell architecture and which has been used by several cloud service providers (CSPs) in the data center since the end of last year. Blackwell Ultra is based on the same architecture and therefore also includes two of these, which are connected to each other via a fabric. Focus on AI forward model inference

Blackwell Ultra was developed, among other things, to meet the higher demands of AI forward model inference, which must quickly process and spend several hundred thousand tokens per request, as they also present the request to the user on demand and thus require higher computing power.

HBM3E reaches 288 GB

To meet these requirements, Nvidia is primarily expanding the memory of Blackwell Ultra to 288 GB HBM3E, based on 192 GB HBM3E near Blackwell with the B200 GPU. Nvidia again distributes the 50% higher bandwidth memory across eight stacks around the GPU, but the respective stacks are now 12 instead of 8 DRAM chips, so a 50% higher storage density is achieved over the same area.

1.5 times the FP4 inference performance

According to NVIDIA, Blackwell Ultra is said to offer 1.5 times the FP4 inference performance compared to Blackwell. The company is talking about 15 petaflops for dense FP4, i.e., without sparsity acceleration, which allows for 30 petaflops. For the original Blackwell GPU, this figure was still 10 petaflops.

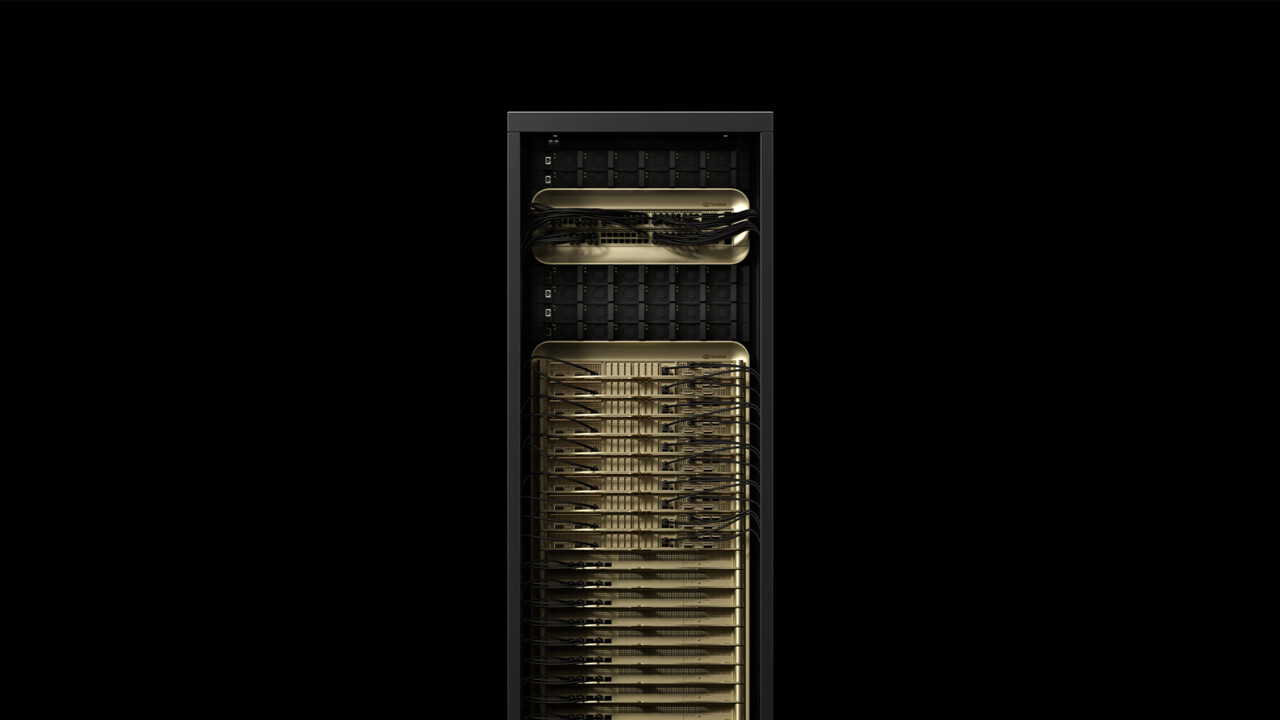

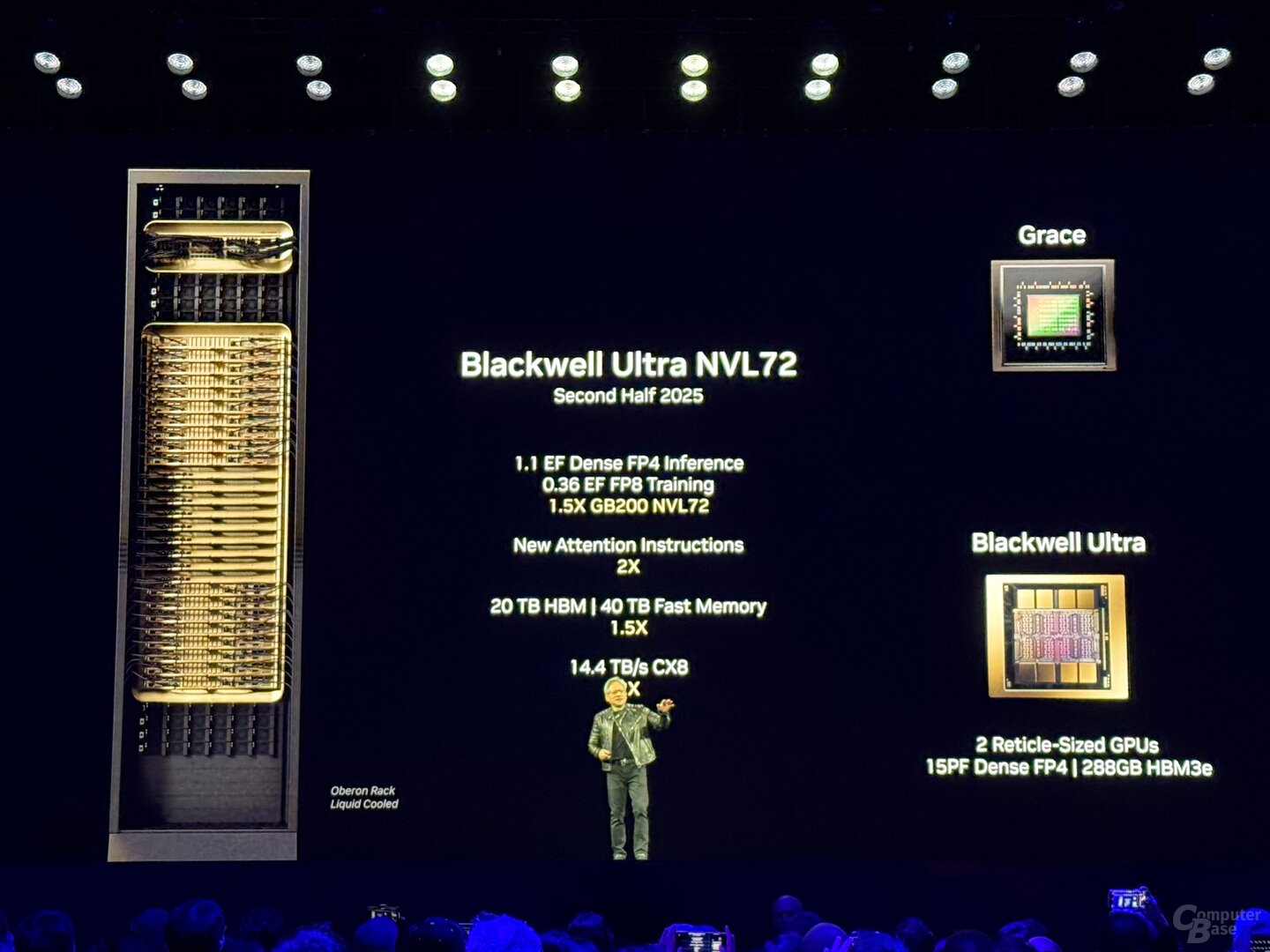

GB300 NVL72 with 72 GPUs and 1.1 exaflops

At the start of initial availability in the second half of 2025, the additional development is to be used in two NVIDIA data center solutions: the GB300 NVL72 and the HGX B300 NVL16. The GB300 NVL72 is a further development of the well-known GB200 NVL72 rack, which combines 72 Blackwell GPUs with 36 ARM-based Grace CPUs in a water-cooled server cabinet. The GB300 NVL72 is no different. Here, too, a total of 36 boards, each with two GPUs and one CPU, form the basis of the solution. The GB300 NVL72 boasts a total of 1.1 exaflops, 20 TB (20,736 GB) HBM3E, and 40 TB LPDDDR5X for the Grace processors. Compared to the previous rack, there should be no more detailed efficiency improvements beyond the compute boards.

GB300 NVL72

GB300 NVL72

The backbone that connects the individual chips to “one large GPU” is the 5th generation of NVLink, with a bandwidth of 1.8 TB/s per GPU and a total of 130 TB/s. NVLink has also been used as a multi-node interconnect since Blackwell, which was previously processed via Infiniband at 100 GB/s, so Nvidia is talking about an 18x performance increase for this specific scenario. The associated “NvLink Switch 7.2T” is also in the rack and is a no less impressive chip. NVIDIA also has the NVLink Switch manufactured in 4NP at TSMC and amounts to 50 billion transistors – that’s almost two-thirds of the Hopper H100 transistors. Up to 576 GPUs can be added to an NVLink domain. HGX B300 with x86 processor

The backbone that connects the individual chips to “one large GPU” is the 5th generation of NVLink, with a bandwidth of 1.8 TB/s per GPU and a total of 130 TB/s. NVLink has also been used as a multi-node interconnect since Blackwell, which was previously processed via Infiniband at 100 GB/s, so Nvidia is talking about an 18x performance increase for this specific scenario. The associated “NvLink Switch 7.2T” is also in the rack and is a no less impressive chip. NVIDIA also has the NVLink Switch manufactured in 4NP at TSMC and amounts to 50 billion transistors – that’s almost two-thirds of the Hopper H100 transistors. Up to 576 GPUs can be added to an NVLink domain. HGX B300 with x86 processor

With the HGX B300 NVL16, NVIDIA also offers a solution without its own ARM CPU. Due to the lack of the “G” in the name, the Grace CPU does not have this solution; instead, 16 B300 and x86 processors are used by NVLink. AMD and Intel have already jumped on the processor bandwagon in the past.

Server vendors and CSPs are on board.

According to Nvidia, Cisco, Dell, Hewlett Packard Enterprise, Lenovo, and Supermicro want to offer a wide range of Blackwell Ultra products, later including Aivre, Asrock, Asus, Eviden, Foxconn, Gigabyte, Inventec, Pegatron, Quanta, Wistron, and Wiwynn. Cloud service providers interested in offering Blackwell Ultra include AWS, Google Cloud, Microsoft Azure, and Oracle. Blackwell-gpus in the cloud also wants to offer Coreweave, Crusoe, Lambda, Nebius, Nscale, Yotta and Ytl.

Techastuce received information about this item from NVIDIA under NDA in advance of and at a manufacturer event in San Jose, California. The cost of arrival, departure, and five hotel accommodations were covered by the company. There was no manufacturer influence or reporting obligation. The only requirement of the NDA was the earliest possible publication date.

Topics: Graphics Cards Artificial Intelligence Nvidia Nvidia Blackwell Nvidia GTC 2025 Source: Nvidia

An engineer by training, Alexandre shares his knowledge on GPU performance for gaming and creation.