Nvidia Kyber: A DGX Superpod Shrinks to Just One Rack

With the Blackwell Ultra DGX Superpod, Nvidia has presented a “ready-to-use AI supercomputer” that delivers 11.5 exaflops (FP4) of computing power for AI projects from 576 GPUs. However, what is still spread across four racks could soon fit into one, as Nvidia’s Kyber rack demonstrates.

Scaling Taken to the Extreme

Scale-up has to come before scale-out, Nvidia CEO Jensen Huang explained to the audience yesterday. What he means is packing components more densely before spreading them across more racks. Nvidia is pursuing this with ever denser racks and reached a preliminary peak with the Kyber rack at GTC 2025.

The Kyber rack was only indirectly a topic of the keynote; you had to pay close attention to the footnotes to find the rack’s development name “Kyber” next to the image of the Rubin Ultra NVL576 planned for the second half of 2027. Instead, the eye was quickly drawn to the gigantic numbers surrounding Rubin Ultra with its four-GPU package.

Kyber does everything differently

Even the front view of Kyber shown in the keynote suggested that Nvidia had not merely packed the components more densely here, but had designed a completely different rack. As with the roadmap outlined through 2028, Nvidia was surprisingly open about the next server generations at its own GTC show and already exhibited Kyber and the new components it requires, albeit only as a proof of concept. But given that Jensen Huang showed the Rubin Ultra NVL576 in this very chassis during the keynote, it can be assumed that the finished product will look much the same.

Kyber rack

576 GPUs in a single rack

Rubin Ultra NVL576 brings, among other things, 144 chip packages with a total of 576 GPUs into a single rack, interconnected via multiple NVLink switches. According to Huang, the rack has a power requirement of 600 kW. For comparison: a GB200 NVL72 (and GB300 NVL144) rack draws 132 kW and holds 144 GPUs (new counting method for GB300) spread across 72 chip packages, plus 36 Grace CPUs and nine NVLink switches.
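The comparison above can be checked with a few lines of arithmetic. The following is a minimal sketch that uses only the figures quoted in the article; the dictionary layout and function names are illustrative, and the "kW per GPU" value is simply rack power divided by GPU count, so it also includes the share of CPUs, switches, and everything else in the rack.

```python
# Figures quoted in the article; the data layout and names are illustrative only.
racks = {
    "GB200 NVL72": {"gpus": 144, "packages": 72, "power_kw": 132},
    "Rubin Ultra NVL576 (Kyber)": {"gpus": 576, "packages": 144, "power_kw": 600},
}


def kw_per_gpu(rack):
    """Rack-level power divided by GPU count (not a measured per-GPU figure)."""
    return rack["power_kw"] / rack["gpus"]


baseline = racks["GB200 NVL72"]
kyber = racks["Rubin Ultra NVL576 (Kyber)"]

# How much denser and how much more power-hungry is the Kyber rack?
density_factor = kyber["gpus"] / baseline["gpus"]
power_factor = kyber["power_kw"] / baseline["power_kw"]

for name, rack in racks.items():
    gpus_per_package = rack["gpus"] // rack["packages"]
    print(f"{name}: {gpus_per_package} GPUs per package, "
          f"{kw_per_gpu(rack):.2f} kW per GPU (rack average)")

print(f"GPU density per rack: {density_factor:.1f}x")
print(f"Power per rack: {power_factor:.2f}x")
```

On these numbers, GPU count per rack rises by a factor of four while rack power rises by roughly four and a half, so the rack-average power per GPU goes up slightly rather than down.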

Kyber does without NVLink copper cables

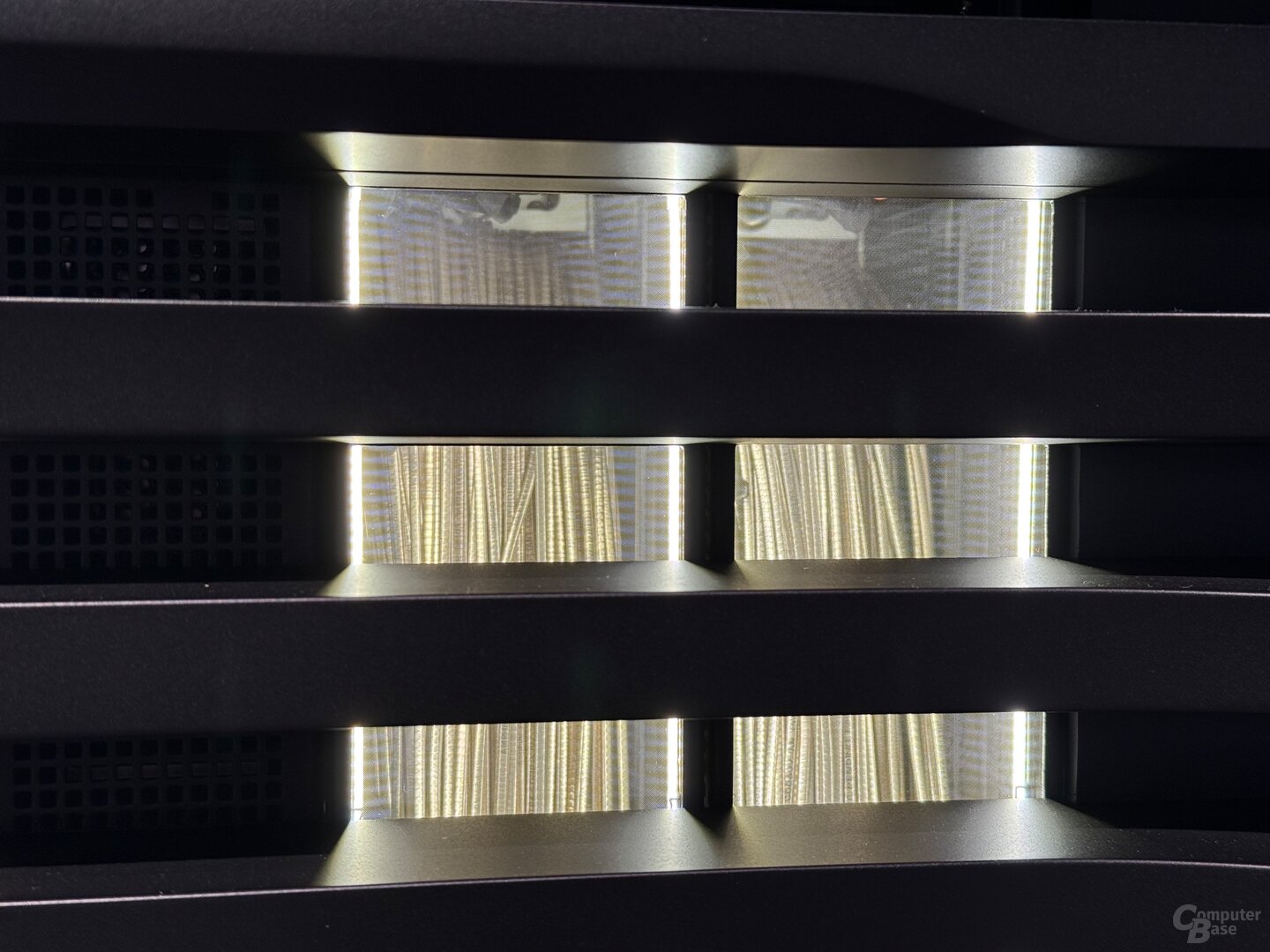

Nvidia is therefore aiming to increase GPU density per rack by a factor of four with Rubin Ultra compared to Rubin, Blackwell Ultra, and Blackwell. To achieve this, the rack structure must be completely redesigned, as Kyber impressively demonstrates. With the GB300 NVL144 and Vera Rubin NVL144, Nvidia installs 18 compute trays with 8 GPUs and 2 CPUs per tray in the rack. Nine NVLink switches are stacked on top of each other between the first ten and the remaining eight compute trays. The “backbone” of the system is 3.2 kilometers of copper cables at the rear of the rack, which connect the compute trays with the NVLink switches to create “one big GPU.”

GB300 NVL72 from behind with copper cables for the NVLink switches

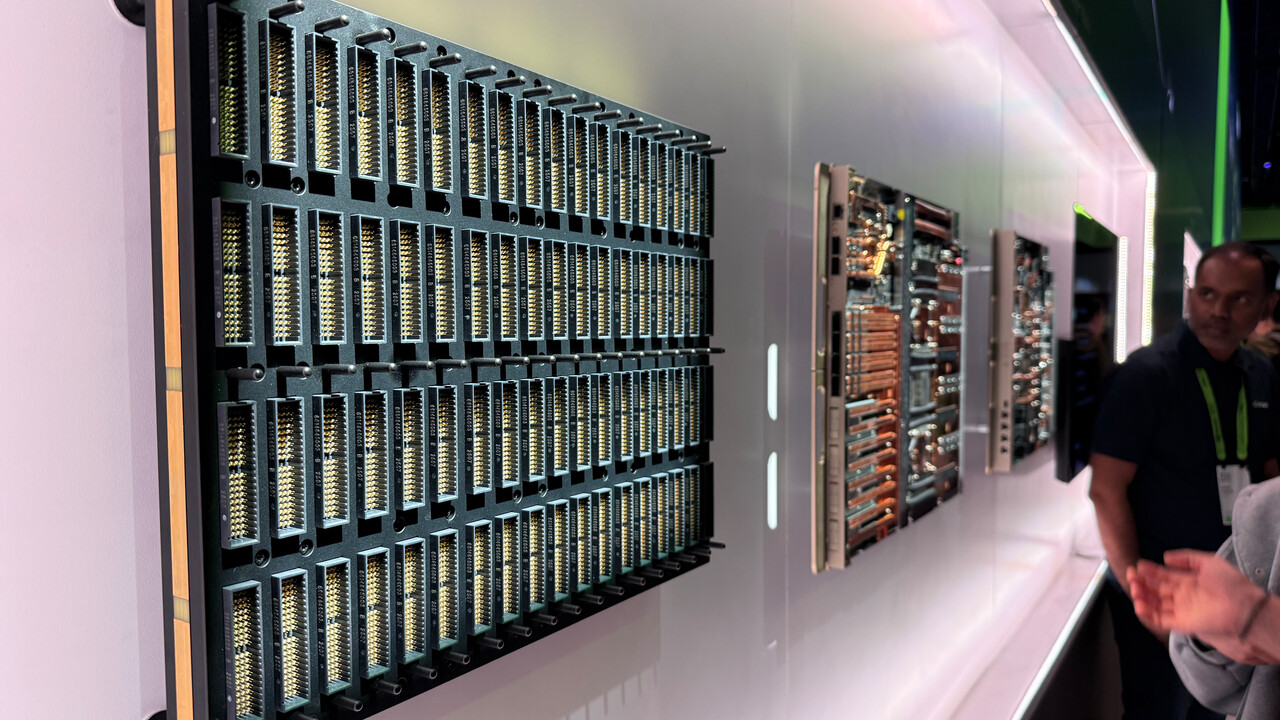

Kyber is not only denser, it also does without those cables. Instead of trays, Nvidia relies on blades for compute and NVLink switches, which are rotated 90 degrees and stand upright like books on a shelf. Kyber can accommodate four blocks of 18 compute blades for a total of 72 compute blades. In Kyber, each compute blade has only about half the build depth of a Blackwell tray, as Nvidia is moving from a hybrid liquid-cooled design to a purely liquid-cooled design that no longer requires classic heatsinks and fans. This time, the liquid cooling truly covers all components (not just GPUs and CPUs, but also storage, DPU, networking, and more), so fans no longer have to blow air across the boards.

Midplane PCB as the new centerpiece

But where are the required NVLink switches in Kyber? They occupy the second half of the rack’s build depth, which conforms to standard dimensions, directly behind the compute blades. Between the two, perpendicular to the blades that are inserted from the front and the rear, sits a new midplane PCB with contact points on both sides. It accepts the compute blades from the front and the NVLink switch blades from the rear via corresponding connectors. Liquid cooling also operates in this area of the rack, with two connections for each compute blade and each NVLink switch blade. In terms of GPU count, the end result is a rack with 144 chip packages for a total of 576 GPUs, as four Rubin Ultra GPUs form a chip package instead of two.
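To see how the tray-based and blade-based layouts add up, here is a small sketch using the counts given in the article. The per-blade values are derived under the assumption that chip packages are spread evenly across the compute blades; the article does not state them, and all variable names are illustrative.

```python
# Tray-based rack (GB300 NVL144 / Vera Rubin NVL144) as described in the article.
TRAYS = 18
GPUS_PER_TRAY = 8
CPUS_PER_TRAY = 2

tray_rack_gpus = TRAYS * GPUS_PER_TRAY
tray_rack_cpus = TRAYS * CPUS_PER_TRAY

# Blade-based Kyber rack (Rubin Ultra NVL576) as described in the article.
BLOCKS = 4
BLADES_PER_BLOCK = 18
CHIP_PACKAGES = 144
GPUS_PER_PACKAGE = 4

compute_blades = BLOCKS * BLADES_PER_BLOCK
kyber_gpus = CHIP_PACKAGES * GPUS_PER_PACKAGE

# Derived values, ASSUMING an even distribution of packages across blades.
packages_per_blade, leftover = divmod(CHIP_PACKAGES, compute_blades)
gpus_per_blade = packages_per_blade * GPUS_PER_PACKAGE

print(f"Tray rack: {tray_rack_gpus} GPUs, {tray_rack_cpus} CPUs in {TRAYS} trays")
print(f"Kyber: {kyber_gpus} GPUs in {compute_blades} compute blades")
print(f"Derived: {packages_per_blade} packages ({gpus_per_blade} GPUs) per blade, "
      f"{leftover} packages left over")
```

Under that even-distribution assumption, a Kyber blade would carry as many GPUs as a current compute tray, and the fourfold increase would come from fitting four times as many blades into the rack as there are trays today.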

Building blocks in the Kyber rack

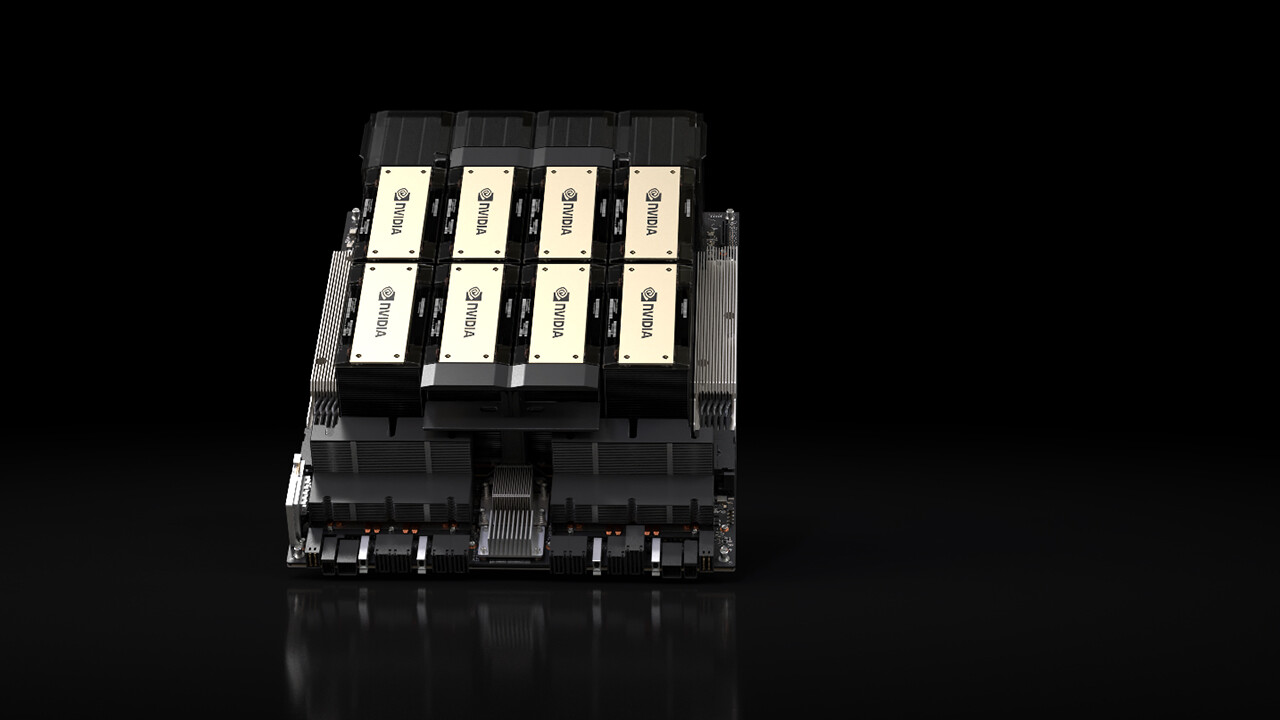

Blackwell Ultra DGX Superpod

This year, however, Nvidia customers will still have to make do with the Blackwell Ultra DGX Superpod announced at GTC, which also offers 576 GPUs but still distributes them across four racks with hybrid cooling. Nvidia markets the new Superpod as an “AI supercomputer in a box,” which, with 576 Blackwell Ultra GPUs and 288 Grace CPUs, achieves a computing power of 11.5 exaflops for FP4. The Blackwell Ultra DGX Superpod is expected to be offered by Nvidia partners later this year.
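For a sense of scale, the Superpod figures can be broken down per GPU and per rack. This is only a rough sketch based on the numbers quoted above; the 11.5 exaflops is a vendor peak figure for FP4 rather than a measured result, and the variable names are illustrative.

```python
# Figures quoted in the article for the Blackwell Ultra DGX Superpod.
GPUS = 576
CPUS = 288
RACKS = 4
FP4_EXAFLOPS = 11.5  # vendor peak figure, not a benchmark result

# 1 exaflops = 1,000 petaflops
fp4_petaflops_per_gpu = FP4_EXAFLOPS * 1000 / GPUS
gpus_per_cpu = GPUS / CPUS
gpus_per_rack = GPUS / RACKS

print(f"FP4 per GPU: about {fp4_petaflops_per_gpu:.2f} petaflops")
print(f"GPUs per Grace CPU: {gpus_per_cpu:.0f}")
print(f"GPUs per rack: {gpus_per_rack:.0f}")
```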

Nvidia Blackwell Ultra DGX Superpod (Image: Nvidia)

Techastuce received information about this item from Nvidia at an event the manufacturer held in San Jose, California. The costs of travel to and from the event and five nights of hotel accommodation were covered by the company. There was no manufacturer influence on the reporting and no obligation to report.

An engineer by training, Alexandre shares his knowledge on GPU performance for gaming and creation.